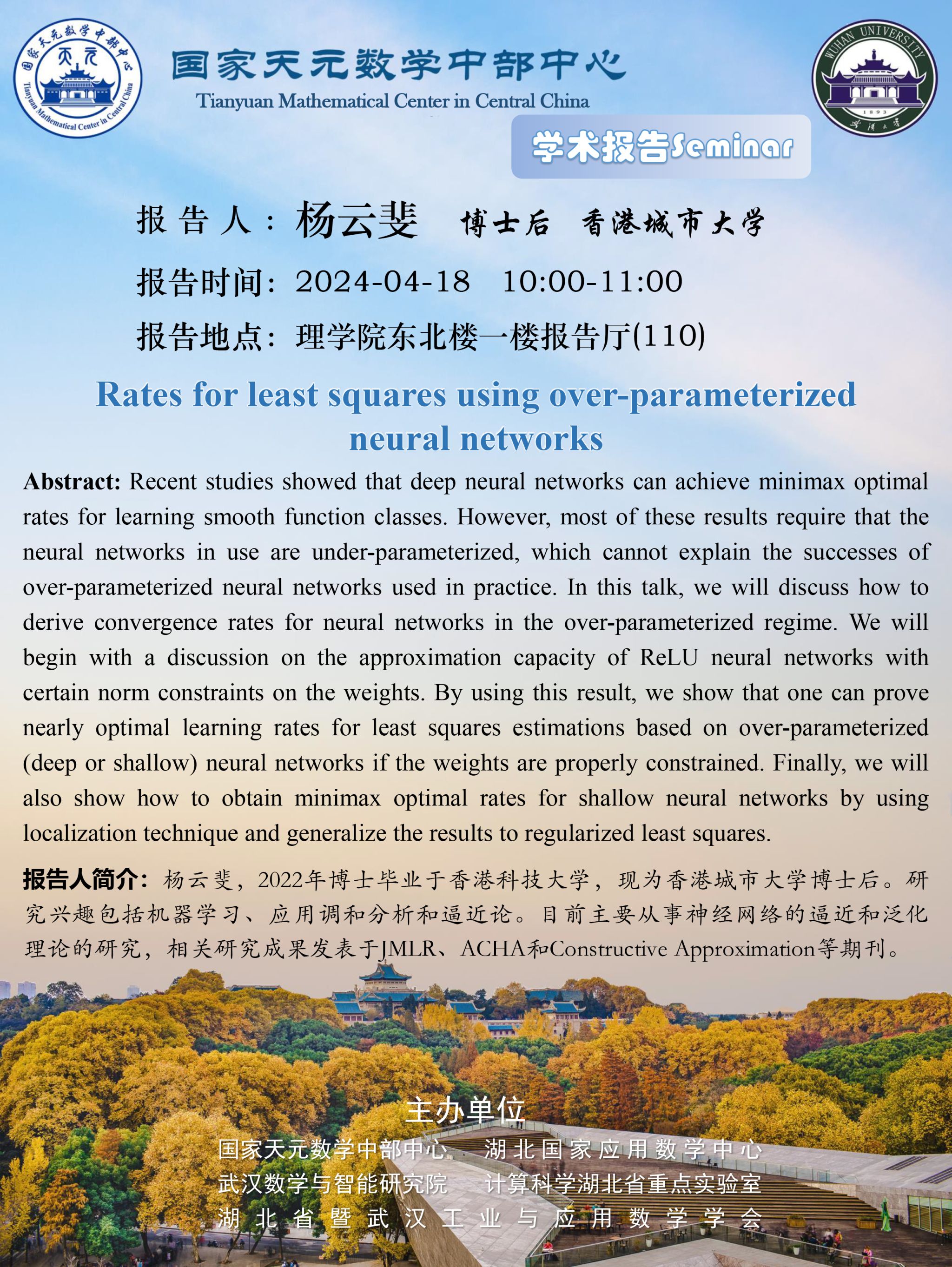

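Title: Rates for least squares using over-parameterized neural networks

Time: 2024-04-18 10:00-11:00

Speaker: Yunfei Yang (杨云斐), Postdoctoral Fellow, City University of Hong Kong

Venue: Lecture Hall (Room 110), 1st Floor, Northeast Building, School of Science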

Abstract: Recent studies have shown that deep neural networks can achieve minimax optimal rates for learning smooth function classes. However, most of these results require the neural networks in use to be under-parameterized, so they cannot explain the success of the over-parameterized neural networks used in practice. In this talk, we will discuss how to derive convergence rates for neural networks in the over-parameterized regime. We will begin with a discussion of the approximation capacity of ReLU neural networks with certain norm constraints on the weights. Using this result, we show that one can prove nearly optimal learning rates for least squares estimators based on over-parameterized (deep or shallow) neural networks, provided the weights are properly constrained. Finally, we will show how to obtain minimax optimal rates for shallow neural networks by using a localization technique, and how to generalize the results to regularized least squares.